GPT-5’s reasoning ability marks a notable step forward in how AI systems evaluate and process complex information. In the latest GPT-5 models, performance on tasks such as pattern recognition and lateral thinking exceeds that of previous generations. Evaluation frameworks for AI reasoning have shifted accordingly, from testing factual recall toward testing multi-step inference and contextual reasoning that draws on cultural nuance and abstract connections. That makes games like Only Connect, which reward clever reasoning and decision-making over knowledge alone, a useful benchmark. Refining these reasoning models in turn opens new avenues for multi-agent orchestration systems and intelligent applications across industries.

Looking at how GPT-5 reasons also says something about the wider class of advanced reasoning systems. These models emphasize multi-step problem-solving and the interpretation of complex, ambiguous data. Assessments modeled on interactive challenges such as the Only Connect game show how deep a model’s understanding goes, because they demand creativity and connection-making rather than lookup. Watching how a system handles that ambiguity tells us more than a recall test would, and the results inform both user-facing applications and the limits of current AI-driven technologies.

Understanding GPT-5 Models and Their Reasoning Capabilities

The GPT-5 models have been heralded as a significant advancement in AI reasoning, particularly on complex problem-solving scenarios. Compared with their predecessors, they are better at recognizing patterns, assimilating information, and drawing connections in ways that resemble human reasoning. The models expose adjustable parameters, including verbosity and reasoning depth (reasoning effort), which can be tuned to change how they perform on lateral thinking tasks. Such capabilities matter for applications that require nuanced decision-making, such as multi-agent orchestration systems.
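To make the idea of adjustable parameters concrete, here is a minimal sketch of how a grid of evaluation configurations could be enumerated. The article names only the two parameters; the level names, the `RunConfig` class, and the `build_grid` helper are assumptions made for illustration, not the setup actually used in the evaluation.

```python
from dataclasses import dataclass
from itertools import product

# Assumed level names: the article does not list the allowed values.
REASONING_LEVELS = ("low", "medium", "high")
VERBOSITY_LEVELS = ("low", "medium", "high")


@dataclass(frozen=True)
class RunConfig:
    """One hypothetical benchmark configuration for a single model."""
    model: str
    reasoning_effort: str
    verbosity: str


def build_grid(model: str) -> list[RunConfig]:
    """Enumerate every reasoning/verbosity combination for one model."""
    return [
        RunConfig(model, reasoning, verbosity)
        for reasoning, verbosity in product(REASONING_LEVELS, VERBOSITY_LEVELS)
    ]


grid = build_grid("gpt-5")
for config in grid:
    print(config)
```

Running each question once per configuration lets the effect of verbosity be separated from the effect of reasoning effort, rather than changing both at once.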

In the context of Only Connect, a game that embodies the essence of creative problem-solving and abstract reasoning, GPT-5 models demonstrate their superior abilities. Contestants must not only recall facts but also discern intricate relationships between clues, which serves as an ideal benchmark for evaluating AI reasoning. As we analyze the effectiveness of GPT-5, we note how these models excel in tasks requiring multi-step inference, setting a new standard for future AI developments.

The Role of Lateral Thinking in AI Evaluation

Lateral thinking plays a pivotal role in assessing AI models like GPT-5, especially when applied to challenges inspired by the Only Connect game format. This approach emphasizes moving away from traditional logical sequences to explore unconventional solutions, thereby broadening the landscape of possible answers. By evaluating models through rounds that prioritize pattern recognition and creative problem-solving, we uncover the depth of reasoning capabilities embedded within AI architectures. Such evaluations not only highlight the models’ proficiency in connecting disparate clues but also provide valuable insights into their cognitive strengths and weaknesses.

Moreover, lateral thinking promotes a style of reasoning that validates the adaptability of AI systems to unexpected scenarios. This becomes particularly apparent in the game’s ‘Wall’ round, where AI models must categorize 16 elements effectively. The challenges posed here reveal how models like GPT-5 handle complexity and abstraction, marking significant strides in AI reasoning assessment. The insights gained from these evaluations inform future improvements, ensuring that AI continues to evolve alongside human reasoning methods.

Evaluating Reasoning Ability with Only Connect

Evaluating reasoning ability in AI models through the Only Connect game illustrates the importance of contextual understanding and cultural references. The structured nature of the game lets us apply specific metrics to gauge how well models comprehend and engage with complex clue patterns. GPT-5 navigates these layers of meaning well, giving evaluators a clearer picture of how it reasons. By examining models’ performance in identifying connections and completing sequences, we can see how well they capture semantic relationships between clues.

This method not only assesses accuracy but also the approach taken by AI when forming responses. For instance, models that perform well in the ‘Missing Vowels’ round demonstrate speed and efficiency, whereas those struggling in the ‘Wall’ round indicate areas where further development is needed. This comprehensive evaluation framework underscores the need for ongoing refinement in AI reasoning evaluation, ensuring that each new iteration, particularly with advancements like GPT-5, continues to push the envelope of what is possible in artificial intelligence.
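As an illustration of why the ‘Missing Vowels’ round is comparatively mechanical, the check below tests whether a candidate answer is consistent with a clue. It is a sketch under two assumptions: that Y counts as a consonant, and that clue spacing carries no information. The function names are hypothetical and not taken from the evaluation code.

```python
VOWELS = set("AEIOU")  # assumption: Y is treated as a consonant


def strip_vowels(phrase: str) -> str:
    """Return the consonant skeleton: uppercase letters only, no vowels or spaces."""
    return "".join(
        ch for ch in phrase.upper() if ch.isalpha() and ch not in VOWELS
    )


def matches_clue(clue: str, answer: str) -> bool:
    """True if the answer reduces to the same consonant skeleton as the clue."""
    return strip_vowels(clue) == strip_vowels(answer)


print(matches_clue("NL YCN NCT", "Only Connect"))  # consistent with the clue
print(matches_clue("NL YCN NCT", "Only Collect"))  # different consonants
```

A consistency check like this only confirms that an answer fits the letters. Deciding whether it also fits the stated category still requires judgment.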

The Impact of Verbosity on AI Performance

Verbosity, as a parameter, shapes how GPT-5 responds, although its effect is mainly on output length. Previous assessments showed that changing the verbosity level altered token usage but had minimal impact on the overall accuracy of responses. Higher verbosity sometimes yields richer contextual output, which in complex reasoning tasks can make the connections between clues clearer. Lower verbosity pushes the model toward concise answers, potentially sacrificing nuance for brevity.

Within the framework of Only Connect, the adjustments in verbosity levels led to interesting patterns in model performance. While GPT-5 maintained effectiveness regardless of verbosity settings, some other models showed more dramatic fluctuations. Such findings advocate for optimized parameter configurations in future AI reasoning tasks, ensuring that models can be fine-tuned to maximize their analytical capabilities without compromising their ability to generate accurate and contextualized outputs.

Token Efficiency in AI Reasoning Models

An essential aspect of evaluating reasoning models like GPT-5 is their token efficiency. The number of tokens a model generates directly affects both computational cost and processing time. GPT-5 models configured with high reasoning settings often use more tokens, but that extra spend is reflected in stronger performance on complex problem-solving tasks. Models that balance token cost against reasoning effectiveness can outperform others overall, which makes this trade-off an important focus in AI development.

In our evaluation of the Only Connect game, we observed that the models which consumed more tokens tended to deliver superior accuracy in their responses. The correlation between token usage and performance highlights the need for ongoing research into optimizing AI architectures for better resource management. As we continue to refine AI systems like GPT-5, it is crucial to develop strategies that enhance reasoning efficiency while minimizing operational costs, thereby supporting broader applications across various domains.
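The relationship between token usage and accuracy can be quantified with a correlation coefficient and a simple efficiency ratio. The sketch below shows one way to do that. The numbers are made-up placeholders chosen to be easy to check by hand, not results from this evaluation, and both helper functions are hypothetical.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


def accuracy_per_1k_tokens(accuracy: float, tokens: int) -> float:
    """Accuracy earned per thousand tokens spent (higher is more efficient)."""
    return accuracy / (tokens / 1000)


# Placeholder values for illustration only, NOT measured results.
tokens_used = [1000.0, 2000.0, 3000.0]
accuracy = [0.5, 0.6, 0.7]

print(pearson(tokens_used, accuracy))
print(accuracy_per_1k_tokens(0.5, 2000))
```

A high correlation alone does not show that spending more tokens causes better answers, so the efficiency ratio is worth reporting alongside raw accuracy.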

Challenges of the ‘Wall’ Round in AI Reasoning

The ‘Wall’ round in the Only Connect game presents unique challenges that test an AI model’s capacity for complex reasoning and associative thinking. This round requires contestants to classify a collection of 16 elements into coherent groups, a task that demands not just recall but deep pattern recognition and contextual evaluation. GPT-5 and other reasoning models often display a wide range of performance levels in this round, reflecting their ability to disentangle complex information while establishing relevant connections.
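One simple way to score a model’s attempt at the wall is to count how many of its proposed groups exactly match a group in the solution. The sketch below assumes the show’s standard layout of four groups of four. The `score_wall` function and the item names are hypothetical, and the evaluation described here may have scored the round differently, for example by also crediting the named connection.

```python
def score_wall(predicted: list[list[str]], solution: list[list[str]]) -> int:
    """Count predicted groups that exactly match a solution group (order ignored)."""
    solution_sets = {frozenset(group) for group in solution}
    predicted_sets = {frozenset(group) for group in predicted}
    # Assumed wall shape: 4 disjoint groups of 4, covering 16 distinct items.
    for groups in (solution_sets, predicted_sets):
        items = {item for group in groups for item in group}
        if len(groups) != 4 or any(len(g) != 4 for g in groups) or len(items) != 16:
            raise ValueError("expected 4 disjoint groups of 4 items")
    return len(solution_sets & predicted_sets)


# Placeholder item names standing in for real clues.
solution = [[f"{letter}{i}" for i in range(1, 5)] for letter in "abcd"]
predicted = [
    ["a4", "a3", "a2", "a1"],  # correct, order does not matter
    ["b1", "b2", "b3", "b4"],  # correct
    ["c1", "c2", "c3", "d4"],  # one item swapped with the next group
    ["d1", "d2", "d3", "c4"],
]
print(score_wall(predicted, solution))
```

Exact-match scoring is strict: a single misplaced item costs two groups, which is part of why this round separates models so sharply.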

AI models, including GPT-5, vary in effectiveness during the ‘Wall’ round. The high degree of complexity and the need for creative problem-solving lead to performance gaps between models. GPT-5 tends to do well relative to the other models, but the round remains challenging even for it. That calls for further testing and refinement, since improved reasoning skills directly contribute to better outcomes in time-sensitive scenarios like competitive game formats.

Next Steps in AI Reasoning Evaluation

As we look ahead, the next steps in AI reasoning evaluation include the comprehensive compilation and analysis of data collected from the benchmark tests, particularly those based on the Only Connect framework. Publishing this dataset will not only showcase each model’s performance but will also identify which specific questions posed greater challenges for various reasoning systems. Such transparency is vital for ongoing development and refinement of AI models, including GPT-5, as it allows researchers to target weaknesses more effectively.

In addition to analyzing existing performance, our approach will also emphasize creating realistic competitive formats for AI models, fostering an environment where they can be actively compared and contrasted. This will enhance understanding of strengths and weaknesses across different models, and the insights gained can inform future iterations of reasoning capabilities to build even more powerful AI systems. Advancing in this field will pave the way for future breakthroughs in AI reasoning, ensuring that development keeps pace with the increasing complexity of tasks in contemporary settings.

Ingram’s Contributions to AI Research

Ingram stands as a pivotal player in the evolving landscape of AI research and development. Established in 2022, the organization’s mission is to explore cutting-edge AI technologies while ensuring compliance and openness, particularly in light of the increasing scrutiny surrounding artificial intelligence systems. By targeting solutions that cater to startups and SMEs, Ingram is fostering a collaborative environment that encourages innovative explorations in AI, especially in dynamic markets where rapid adaptability is crucial.

As we continuously refine our methodologies and assessment frameworks, Ingram remains committed to staying at the forefront of AI advancements. By partnering with experts in the field and leveraging groundbreaking theories and technologies, we aim to push the boundaries of what AI models like GPT-5 can achieve. This commitment not only serves the immediate technology landscape but also contributes to the broader dialogue on the ethical implications and future potential of AI in society.

Frequently Asked Questions

What are the reasoning abilities of GPT-5 models?

GPT-5 models exhibit advanced reasoning abilities that include pattern recognition, lateral thinking, and multi-step inference, allowing them to process complex information and make informed decisions based on context.

How does GPT-5 compare to previous reasoning models?

While earlier models like GPT-3 and GPT-4 demonstrated strong reasoning capabilities, GPT-5 has shown significant improvements in both decision-making speed and accuracy, especially in tasks involving contextual reasoning and abstraction.

What is the significance of the ‘Only Connect’ game in evaluating AI reasoning?

The ‘Only Connect’ game serves as an ideal benchmark for evaluating AI reasoning abilities, emphasizing clever connections and pattern recognition over rote memorization, making it an effective test for models like GPT-5.

How does GPT-5 handle challenges in reasoning tasks like those in ‘Only Connect’?

GPT-5 excels in reasoning tasks from ‘Only Connect’ by analyzing clues effectively, making educated guesses, and utilizing various reasoning strategies to enhance performance, even in complex scenarios like the Wall round.

What parameters were used to evaluate the reasoning abilities of GPT-5?

We evaluated GPT-5 using parameters such as reasoning effort, verbosity, and token usage to measure its effectiveness in processing information and generating accurate responses.

Why is lateral thinking important in AI reasoning models like GPT-5?

Lateral thinking enhances GPT-5’s reasoning by allowing it to approach problems creatively and find connections between seemingly unrelated pieces of information, which is crucial for complex reasoning tasks.

What findings emerged from testing GPT-5 against tasks from the ‘Only Connect’ game?

Testing GPT-5 on ‘Only Connect’ tasks revealed its superior performance in recognizing patterns and making decisions under pressure, particularly excelling in the Missing Vowels round and facing challenges in the Wall round.

How does token usage impact the efficiency of GPT-5 in reasoning tasks?

GPT-5 configurations with higher reasoning settings use more tokens, but they are also more effective and accurate, which points to a trade-off between resource consumption and reasoning capability.

What are the future directions for testing reasoning models like GPT-5?

Future testing will involve creating competitive formats that pit models against each other, assessing their performance across various reasoning tasks and identifying challenges to improve AI development.

How do GPT-5 models make judgment calls during reasoning evaluations?

GPT-5 models utilize their reasoning capabilities to make judgment calls by deciding whether to rely on available clues or request additional information through structured output parameters, optimizing their decision-making process.
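The judgment call described above can be sketched as a loop in which the model either answers or asks for another clue. The article does not specify the structured output schema, so the `Decision` class and `play_question` function are assumptions. The 5/3/2/1 point scale follows the television show’s connections round and may differ from the scoring used in the evaluation.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Points available after seeing 1, 2, 3, or 4 clues (the show's scale).
POINTS_BY_CLUES_SEEN = {1: 5, 2: 3, 3: 2, 4: 1}


@dataclass
class Decision:
    """Hypothetical structured output: answer=None means 'show another clue'."""
    answer: Optional[str]


def play_question(
    clues: list[str],
    correct: str,
    decide: Callable[[list[str]], Decision],
) -> int:
    """Reveal clues one at a time until the model commits to an answer."""
    if len(clues) != 4:
        raise ValueError("expected exactly 4 clues")
    for seen in range(1, 5):
        decision = decide(clues[:seen])
        if decision.answer is None and seen < 4:
            continue  # the model asked for another clue
        if decision.answer is None:
            return 0  # no clues left and still no answer
        # Exact string matching is a simplification of real answer grading.
        is_right = decision.answer.strip().lower() == correct.strip().lower()
        return POINTS_BY_CLUES_SEEN[seen] if is_right else 0
    return 0


def cautious_model(seen: list[str]) -> Decision:
    """Stub standing in for a model call: waits for three clues, then answers."""
    return Decision(None) if len(seen) < 3 else Decision("Rivers")


print(play_question(["c1", "c2", "c3", "c4"], "rivers", cautious_model))
```

Because a wrong early guess scores nothing while waiting lowers the reward, the point scale turns each question into a small risk decision rather than a pure knowledge test.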

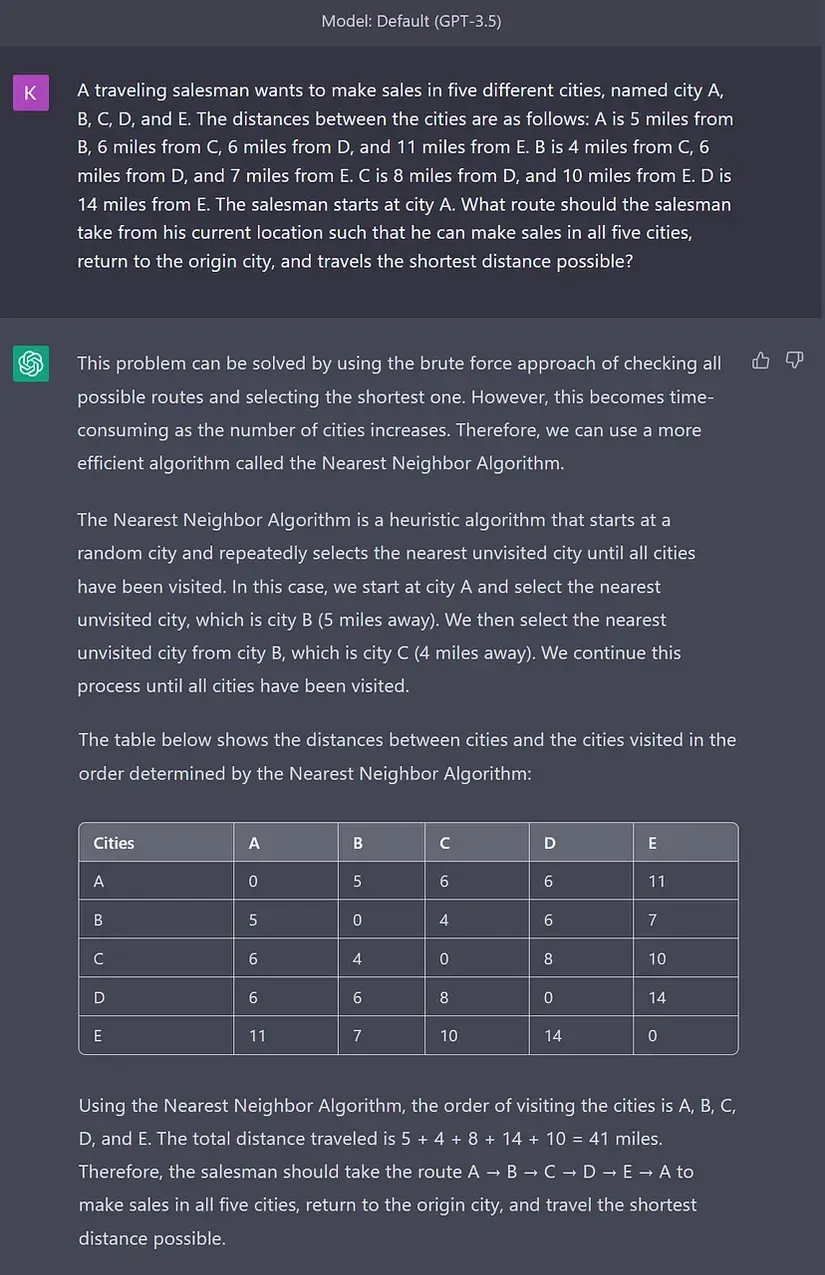

| Aspect | Details |
|---|---|
| Purpose of Evaluation | Assess reasoning abilities, quality of decision-making, and improvements in GPT-5 models. |
| Key Reasoning Skills Tested | Pattern recognition, lateral thinking, abstraction, contextual reasoning, multi-step inference. |
| Benchmarking Tool | Only Connect game, focusing on clever reasoning instead of mere knowledge recall. |
| Selected Models | GPT-3, GPT-4-Mini, GPT-4.1, Claude Sonnet 4, Opus 4, Opus 4.1, and different configurations of GPT-5. |
| Performance Outcomes | GPT-5 models outperformed others in accuracy; verbosity affected token usage but not accuracy. |
| Challenging Rounds | The Wall round proved most difficult, while Missing Vowels was the easiest. |

Summary

GPT-5 reasoning ability showcases significant advancements in AI model performance, particularly in complex reasoning tasks. This evaluation highlights the importance of reasoning skills like pattern recognition and lateral thinking, which are crucial for enhancing decision-making capabilities in AI applications. By benchmarking against challenges designed to test these skills, such as the Only Connect game, researchers can better understand the strengths and weaknesses of different models, particularly the newer GPT-5. This ongoing analysis is pivotal for improving future models and pushing the boundaries of AI research.
