Content Scraping: Unlocking Data from Websites

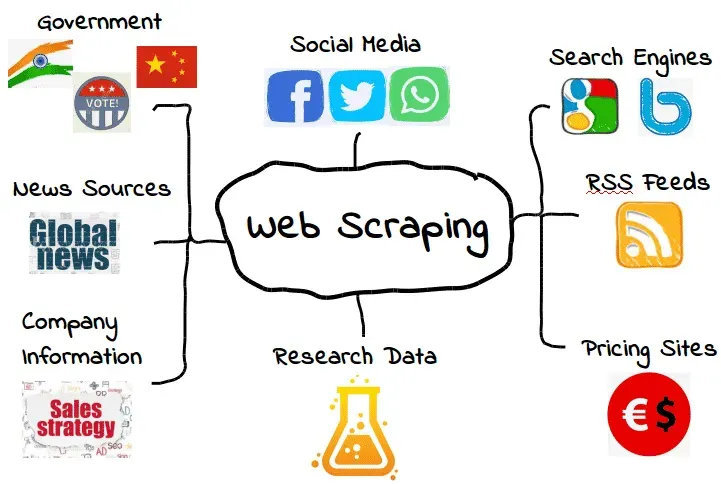

Content scraping has become a widely used data-extraction technique, enabling businesses to collect information from many online sources. It relies on web scraping tools to automate the retrieval of content from websites, so data can be gathered far more efficiently and consistently than by copying it by hand. By extracting data from a page’s HTML, organizations can turn information that is published for human readers into structured datasets they can analyze. As the web keeps changing, a sound scraping strategy matters whether the goal is market research, competitive analysis, or content curation, and mastering website data extraction helps organizations keep pace in a fast-moving online environment.

The practice goes by several names, including web harvesting and web data extraction. It is sometimes loosely called data mining, although data mining properly refers to finding patterns in data after it has been collected. Whatever the label, these techniques draw information from multiple online platforms to build comprehensive datasets. Advances in technology have made HTML data extraction accessible to businesses and individuals alike, and modern web scraping tools let users extend their research capabilities and streamline their workflows. Regardless of the terminology, the underlying principle remains the same: acquiring information from digital sources efficiently.

Understanding Content Scraping

Content scraping, often referred to as web scraping, is the process of extracting information from websites. This process is accomplished using various web scraping tools that automate the retrieval of data from online resources. Many users leverage scraping to gather large volumes of information efficiently, whether it’s for data analysis, creating databases, or simply for personal use. The technology allows users to bypass the tedious task of manual data entry by automating the extraction from HTML content.

Despite its usefulness, content scraping raises ethical and legal questions. Many websites have terms of service that explicitly prohibit scraping, so users need to understand the implications of their actions before they start. The methods themselves vary widely, from simple copy-and-paste to dedicated software for HTML content extraction, and scrapers can capture everything from text and images to page metadata and other site information.
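As a minimal sketch of capturing the text, image, and metadata details mentioned above, the following uses only Python’s standard-library `html.parser`. The sample HTML, the `MetadataExtractor` class name, and the choice of fields are illustrative assumptions; Beautiful Soup offers a more forgiving parser for messy real-world markup.

```python
from html.parser import HTMLParser


class MetadataExtractor(HTMLParser):
    """Collects the page title, named <meta> tags, and <img> sources."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}      # e.g. {"description": "..."}
        self.images = []    # list of {"src": ..., "alt": ...}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attrs arrives as a list of (name, value) pairs
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attrs.get("name") and attrs.get("content") is not None:
            self.meta[attrs["name"]] = attrs["content"]
        elif tag == "img" and attrs.get("src"):
            self.images.append({"src": attrs["src"], "alt": attrs.get("alt") or ""})

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


# Illustrative sample page (hypothetical content).
sample = """<html><head><title>Example Post</title>
<meta name="description" content="A short summary.">
<meta name="author" content="Example Author"></head>
<body><p>Hello</p><img src="/img/chart.png" alt="Sales chart"></body></html>"""

extractor = MetadataExtractor()
extractor.feed(sample)
print(extractor.title)   # Example Post
print(extractor.meta)    # {'description': 'A short summary.', 'author': 'Example Author'}
print(extractor.images)  # [{'src': '/img/chart.png', 'alt': 'Sales chart'}]
```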

Essential Web Scraping Tools

When it comes to data scraping, a wide range of tools can simplify the process. For Python developers building custom projects, Beautiful Soup is a library for parsing HTML and XML documents, while Scrapy is a full crawling framework that also handles requests, link following, and data export. Both make HTML content extraction easier by letting code navigate and query the structure of a page, which makes them valuable to data scientists and web developers alike.

For non-programmers, tools such as Octoparse and ParseHub offer visual, point-and-click interfaces that let users scrape content from websites without deep technical knowledge: users select the data they want on the page, and the tool automates its collection. Choosing the right tool depends largely on the user’s technical skills and the complexity of the scraping task.

The Process of Scraping Content from Websites

Scraping content from websites involves several key steps, starting with identifying the target site and the type of data needed. Users typically begin by inspecting the website’s HTML structure, for example with the browser’s developer tools, to find the elements that contain the desired information. Once those elements are identified, a scraping tool can automate the process; in effect, the user tells the software which parts of the page to extract, often by means of CSS selectors or XPath expressions.
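The step of telling the software which elements to extract can be sketched with the standard library alone. The example below assumes, hypothetically, that the target data sits in elements marked with `class="name"` and `class="price"`; on a real site you would find the right class names by inspecting the HTML. It also assumes reasonably well-formed markup, since it counts opening and closing tags to know when a matching element ends.

```python
from html.parser import HTMLParser


class ClassTextExtractor(HTMLParser):
    """Collects the text inside every element whose class attribute includes target_class."""

    # Void elements never have an end tag, so they must not affect the depth count.
    VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
                 "link", "meta", "source", "track", "wbr"}

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.results = []
        self._depth = 0      # nesting depth inside the current matching element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in self.VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self._depth:
            self._depth += 1          # a child tag inside a matching element
        elif self.target_class in classes:
            self._depth = 1           # entering a matching element
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in self.VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            # Matching element closed: store its text with whitespace collapsed.
            self.results.append(" ".join("".join(self._buffer).split()))

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


# Hypothetical product listing; class names come from inspecting the page.
page = """
<ul>
  <li class="product"><span class="name">Widget</span> <span class="price">$19.99</span></li>
  <li class="product"><span class="name">Gadget</span> <span class="price">$24.50</span></li>
</ul>
"""

names = ClassTextExtractor("name")
names.feed(page)
prices = ClassTextExtractor("price")
prices.feed(page)
print(list(zip(names.results, prices.results)))
# [('Widget', '$19.99'), ('Gadget', '$24.50')]
```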

Once the scraping run is complete, the extracted information usually needs further cleaning and organization. This includes removing duplicates, standardizing values such as prices and dates, and converting the data into a more usable format such as CSV or JSON. It is vital to ensure that the data is accurate and relevant, which often calls for additional validation code or manual review to verify the integrity of the scraped content.
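A minimal cleaning pass might look like this. The row fields (`name`, `price`) and the rule for what counts as a duplicate are assumptions for illustration; the point is the sequence of stripping whitespace, normalizing formats, dropping invalid rows and duplicates, and exporting to JSON or CSV.

```python
import csv
import io
import json

# Hypothetical raw rows as they might come out of a scraper.
raw_rows = [
    {"name": " Widget ", "price": "$19.99"},
    {"name": "Gadget", "price": "24.5"},
    {"name": "Widget", "price": "$19.99"},   # duplicate once whitespace is stripped
    {"name": "Gizmo", "price": "N/A"},       # unparseable price
]


def clean(rows):
    """Strip whitespace, normalize prices to floats, drop invalid rows and duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        name = row["name"].strip()
        try:
            price = round(float(row["price"].replace("$", "").replace(",", "")), 2)
        except ValueError:
            continue  # skip rows whose price can't be parsed
        key = (name, price)
        if key in seen:
            continue  # skip exact duplicates
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


def to_csv(rows):
    """Serialize cleaned rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = clean(raw_rows)
print(json.dumps(rows))
# [{"name": "Widget", "price": 19.99}, {"name": "Gadget", "price": 24.5}]
print(to_csv(rows))
```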

Data Privacy and Ethical Considerations in Web Scraping

As web scraping becomes more prevalent, its ethical and legal implications are drawing more attention. Many websites enforce strict rules on data collection to protect their users’ privacy and their own intellectual property. It is crucial to read a site’s terms of service before scraping it, as violating those terms can lead to IP bans or legal action.

Ethical scraping also means respecting the site’s robots.txt file, which tells automated bots which areas of the website are off-limits. The file is advisory rather than technically enforced, so honoring it is up to the scraper. Responsible scraping, including keeping request rates low enough not to overload the server, lets users extract the information they need without compromising the rights and privacy of the site’s owners or users. Understanding these responsibilities protects not only the integrity of the scraping process but also the reputation of those who engage in it.
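Python’s standard library includes `urllib.robotparser`, which can check a URL against a site’s robots.txt rules before a scraper requests it. In this sketch the robots.txt content is supplied inline so it runs offline; against a live site you would call `set_url()` with the site’s robots.txt address and then `read()`. The rules and the user-agent string `MyScraper/1.0` are hypothetical placeholders.

```python
from urllib.robotparser import RobotFileParser

# robots.txt rules supplied inline so the sketch runs offline (hypothetical rules).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "MyScraper/1.0"  # hypothetical user-agent string

rp = RobotFileParser()
# For a live site: rp.set_url("https://example.com/robots.txt"); rp.read()
rp.parse(ROBOTS_TXT.splitlines())

for url in ("https://example.com/articles/1", "https://example.com/private/report"):
    print(url, "allowed" if rp.can_fetch(USER_AGENT, url) else "disallowed")

# Crawl-delay (if declared) tells polite crawlers how many seconds to wait between requests.
print(rp.crawl_delay(USER_AGENT))  # 5
```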

HTML Content Extraction Techniques

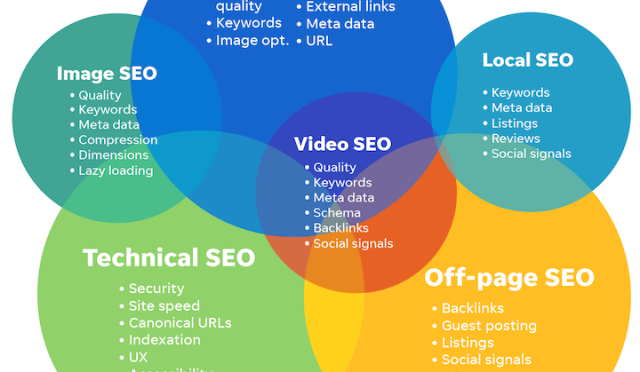

HTML content extraction is a foundational skill for anyone involved in data scraping, and the right technique depends on the requirements and the tools used. Regular expressions, for example, can quickly match well-defined text patterns such as prices or dates, but they are brittle when applied to HTML markup itself. Document Object Model (DOM) parsing, by contrast, turns the page into a tree of elements that can be navigated hierarchically, which makes it more robust to variations in formatting.
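The contrast between the two approaches shows up even on a tiny fragment. In this illustrative sketch, a regular expression reliably pulls out price-like text, while a naive regex for links misses an `<a>` tag whose attributes appear in an unexpected order; the parser-based version reads tags and attributes directly. Python’s `html.parser` is an event-driven parser rather than a full DOM, so treat it as a stand-in for DOM libraries such as Beautiful Soup or lxml.

```python
import re
from html.parser import HTMLParser

html = '<p>Now <b>$19.99</b>, was $24.50</p><a class="next" href="/page/2">Next</a>'

# Regular expression: well suited to a well-defined text pattern such as a price.
PRICE_RE = re.compile(r"\$\d+(?:\.\d{2})?")
print(PRICE_RE.findall(html))  # ['$19.99', '$24.50']

# A naive regex for links assumes href is the first attribute, so it misses this tag.
NAIVE_LINK_RE = re.compile(r'<a href="([^"]+)"')
print(NAIVE_LINK_RE.findall(html))  # []


# Parser-based extraction reads tags and attributes as structured data,
# so attribute order, quoting style, and spacing inside the tag don't matter.
class LinkCollector(HTMLParser):
    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.links.append(dict(attrs).get("href"))


collector = LinkCollector()
collector.feed(html)
print(collector.links)  # ['/page/2']
```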

An alternative to HTML extraction altogether is to use an API provided by the website. Many sites expose structured endpoints, often returning JSON, that let users bypass traditional scraping entirely. APIs can be very efficient, but not every website offers one, or exposes all the data a user needs through it, so scraper developers often fall back to parsing the HTML themselves.
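When a site does offer an API, the work shifts from parsing markup to decoding structured data. The endpoint URL and the `{"items": [{"title": ...}]}` response shape below are hypothetical; real APIs document their own URLs, authentication, and payload formats. The network function is defined but not called, so the example runs offline against a sample payload.

```python
import json
from urllib.request import Request, urlopen

API_URL = "https://api.example.com/v1/articles?page=1"  # hypothetical endpoint


def fetch_json(url, timeout=10):
    """Fetch and decode a JSON response (network call; not run in the example below)."""
    request = Request(url, headers={"Accept": "application/json"})
    with urlopen(request, timeout=timeout) as response:
        return json.load(response)


def extract_titles(payload):
    """Pull fields out of the assumed response shape {"items": [{"title": ...}, ...]}."""
    return [item["title"] for item in payload.get("items", [])]


# Offline stand-in for what fetch_json(API_URL) might return.
sample_payload = json.loads('{"items": [{"title": "First post"}, {"title": "Second post"}]}')
print(extract_titles(sample_payload))  # ['First post', 'Second post']
```

Because the API already returns structured fields, there is no selector logic to maintain when the site’s visual layout changes.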

Building Custom Web Scraping Solutions

For advanced users who need a tailored solution, building a custom web scraper can be the best approach. This typically involves a programming language such as Python combined with libraries like Beautiful Soup for parsing HTML or Selenium for driving a real browser when pages must be interacted with dynamically. Custom scrapers allow fine-grained control over how and what data is collected, and they can be updated as a site’s layout or structure changes.

Developers can also add scheduling and automation to their custom solutions, so that data is collected continuously or at fixed intervals. This flexibility makes custom scrapers well suited to market research, content aggregation, and competitive analysis, where timely data retrieval is crucial.
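Interval-based collection can be sketched as a simple loop. This is a hypothetical helper (`run_every`) for illustration only; in practice scheduling is usually delegated to cron, a task queue, or a scheduler library rather than a long-running blocking loop.

```python
import time


def run_every(job, interval_seconds, max_runs):
    """Call job() up to max_runs times, starting a new run every interval_seconds.

    Exceptions from a single run are recorded instead of stopping the schedule.
    """
    results = []
    for run in range(max_runs):
        started = time.monotonic()
        try:
            results.append(job())
        except Exception as exc:  # keep the schedule alive if one run fails
            results.append(exc)
        if run < max_runs - 1:
            # Sleep only for whatever is left of the interval after the job ran.
            elapsed = time.monotonic() - started
            time.sleep(max(0.0, interval_seconds - elapsed))
    return results


# Usage with a stand-in job (a real one would fetch and parse pages).
run_count = 0


def fake_scrape():
    global run_count
    run_count += 1
    return f"snapshot {run_count}"


print(run_every(fake_scrape, interval_seconds=0.1, max_runs=3))
# ['snapshot 1', 'snapshot 2', 'snapshot 3']
```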

Challenges Faced in Web Scraping

While web scraping offers significant advantages, it also presents challenges. One common issue is content generated by JavaScript in the browser: tools that only download the raw HTML never see it, so they may return incomplete or empty results. In such cases, a headless browser (a real browser engine running without a visible window, often controlled through a tool such as Selenium) can render the full page first, enabling effective data scraping even from client-side rendered web applications.
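Before reaching for a headless browser, it can help to check whether the downloaded HTML actually contains the content. The heuristic below is an assumption of this sketch, not a standard test: a page with almost no visible text outside `<script>` and `<style>` tags, but with at least one script, is probably a client-side rendered shell. The 200-character threshold is arbitrary and would need tuning per site.

```python
from html.parser import HTMLParser


class PageProfile(HTMLParser):
    """Counts visible text characters (outside <script>/<style>) and <script> tags."""

    def __init__(self):
        super().__init__()
        self.text_chars = 0
        self.scripts = 0
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.scripts += 1
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.text_chars += len(data.strip())


def probably_needs_rendering(html, min_chars=200):
    """Heuristic guess: little visible text plus at least one script suggests a JS-rendered shell."""
    profile = PageProfile()
    profile.feed(html)
    profile.close()
    return profile.text_chars < min_chars and profile.scripts > 0


spa_shell = '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>'
static_page = ("<html><body><article><p>" + "Plenty of server-rendered text. " * 10
               + "</p></article></body></html>")

print(probably_needs_rendering(spa_shell))    # True
print(probably_needs_rendering(static_page))  # False
```

Only pages flagged by a check like this would then be sent to the slower, heavier headless browser.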

Another challenge is dealing with anti-scraping measures. Many sites use CAPTCHAs, limit the number of requests allowed per IP address, or require users to log in, all of which complicate scraping. Common workarounds include routing requests through rotating proxies and varying the user-agent string. However, these tactics must be applied judiciously to avoid breaching legal or ethical guidelines.
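Rather than evasion tactics, the sketch below shows the judicious side of dealing with rate limits: a minimum delay between requests and exponential backoff with random jitter when a server answers HTTP 429 (Too Many Requests) or 503 (Service Unavailable). The `fetch` callable, which returns a `(status, body)` pair, is a hypothetical interface so the example runs without a network.

```python
import random
import time


class PoliteThrottle:
    """Enforces a minimum delay between consecutive requests to one site."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._last = None

    def wait(self):
        if self._last is not None:
            remaining = self.min_interval - (time.monotonic() - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


def backoff_delays(retries, base=1.0, cap=60.0):
    """Exponential backoff with full jitter: delay i is uniform in [0, min(cap, base * 2**i)]."""
    return [random.uniform(0, min(cap, base * 2 ** attempt)) for attempt in range(retries)]


def fetch_with_retries(fetch, url, throttle, retries=3, base=1.0):
    """Call fetch(url) -> (status, body), retrying on HTTP 429/503 with backoff.

    `fetch` is a hypothetical callable supplied by the caller.
    """
    for delay in backoff_delays(retries, base) + [None]:
        throttle.wait()
        status, body = fetch(url)
        if status not in (429, 503) or delay is None:
            return status, body  # success, a non-retryable status, or out of retries
        time.sleep(delay)


# Usage with a fake fetcher that is rate-limited once, then succeeds.
responses = iter([(429, ""), (200, "<html>ok</html>")])
result = fetch_with_retries(lambda url: next(responses), "https://example.com/",
                            PoliteThrottle(0.05), retries=3, base=0.01)
print(result)  # (200, '<html>ok</html>')
```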

Future Trends in Web Scraping

The future of web scraping appears promising, especially with the rise of artificial intelligence and machine learning technologies. Upcoming tools will likely leverage these advancements to offer more nuanced data extraction capabilities, automatically adapting to website changes and improving accuracy. Enhanced user interfaces may also emerge, allowing non-technical users to harness the power of data scraping without extensive programming knowledge.

Furthermore, as businesses increasingly rely on data-driven decision-making, the demand for effective web scraping solutions will continue to grow. Innovations in data processing and storage will create more opportunities for users to extract and analyze vast datasets efficiently. Ultimately, the evolution of web scraping tools will undoubtedly shape the landscape of data collection and analysis in the years to come.

Legal Framework Surrounding Data Scraping

Understanding the legal framework surrounding data scraping is essential for developers and businesses engaged in this practice. Various countries have different laws regulating how data can be collected, used, and disseminated, and navigating these regulations can be complex. Additionally, court rulings on landmark cases can offer insights into how data scraping is interpreted in legal terms, potentially influencing future operational practices.

Developers must remain vigilant about respecting copyright laws and privacy regulations, particularly as the digital landscape evolves. By incorporating best practices and maintaining transparency in their scraping activities, organizations can mitigate potential legal risks and establish a reputation for ethical data practices. Engaging with legal counsel when designing scraping operations can provide additional protection and clarity in navigating these waters.

Frequently Asked Questions

What is content scraping and how can it be beneficial for data extraction?

Content scraping, or web scraping, involves extracting data from websites using various web scraping tools. It allows users to collect large amounts of website data, which can be useful for market analysis, research, and competitive intelligence.

What web scraping tools are recommended for efficient content scraping?

Several web scraping tools are effective for scraping content from websites, including Beautiful Soup, Scrapy, and Octoparse. These tools facilitate HTML content extraction and streamline the data scraping process.

Is it legal to scrape content from websites without permission?

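The legality of scraping content from websites depends on the site’s terms of service, the laws of the relevant jurisdiction, and the kind of data involved; personal data and copyrighted material, for instance, are subject to additional rules. Always check the website’s policies before extracting data to avoid potential legal issues.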

What are the main challenges when scraping content from websites?

Common challenges in content scraping include handling dynamic content, dealing with CAPTCHAs, and respecting robots.txt files. Additionally, some websites implement anti-scraping measures that can hinder data scraping efforts.

How can I optimize my data scraping process for better results?

To optimize your data scraping process, choose tools suited to the task, keep your extraction logic clean and precisely targeted, and throttle your requests to avoid IP bans. Re-check the page’s HTML layout regularly, since a changed layout is a common reason for inaccurate or missing results.

What techniques enhance the accuracy of HTML content extraction?

Techniques that enhance the accuracy of HTML content extraction include using XPath or CSS selectors to target specific elements, handling pagination correctly, and employing data validation checks after scraping.
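Pagination handling and post-scrape validation can be combined in one loop. This sketch assumes a hypothetical listing markup in which each item is an `<li>` carrying `data-name` and `data-price` attributes and the next page is linked with `rel="next"`; the in-memory `FAKE_SITE` dictionary stands in for real HTTP requests.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ListingParser(HTMLParser):
    """Collects items from <li data-name=... data-price=...> and the rel="next" link."""

    def __init__(self):
        super().__init__()
        self.items = []
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "li" and "data-name" in attrs:
            self.items.append({"name": attrs["data-name"] or "", "price": attrs.get("data-price")})
        elif tag == "a" and "next" in (attrs.get("rel") or "").split():
            self.next_href = attrs.get("href")


def is_valid(item):
    """Validation check: non-empty name and a positive numeric price."""
    try:
        return bool(item["name"].strip()) and float(item["price"]) > 0
    except (TypeError, ValueError):
        return False


def scrape_all_pages(fetch, start_url, max_pages=50):
    """Follow rel="next" links, stopping at the last page, a repeated URL, or max_pages."""
    url, seen, valid, rejected = start_url, set(), [], []
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        parser = ListingParser()
        parser.feed(fetch(url))
        for item in parser.items:
            (valid if is_valid(item) else rejected).append(item)
        # Resolve relative "next" links against the current page URL.
        url = urljoin(url, parser.next_href) if parser.next_href else None
    return valid, rejected


# In-memory stand-in for a two-page listing (hypothetical markup and URLs).
FAKE_SITE = {
    "https://shop.example.com/list?page=1":
        '<ul><li data-name="Widget" data-price="19.99"></li>'
        '<li data-name="Gadget" data-price="oops"></li></ul>'
        '<a rel="next" href="/list?page=2">Next</a>',
    "https://shop.example.com/list?page=2":
        '<ul><li data-name="Gizmo" data-price="5.00"></li></ul>',
}

valid, rejected = scrape_all_pages(FAKE_SITE.__getitem__, "https://shop.example.com/list?page=1")
print([item["name"] for item in valid])     # ['Widget', 'Gizmo']
print([item["name"] for item in rejected])  # ['Gadget']
```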

Can content scraping be automated, and if so, how?

Yes, content scraping can be automated using scripts written in languages like Python, combined with libraries such as Scrapy or Selenium. Automation allows for scheduled scraping and efficient data collection over time.

How do I handle ethical considerations when scraping content from websites?

When scraping content from websites, consider ethical practices such as respecting the site’s robots.txt file, avoiding overload on servers, and properly attributing the source of the data when using extracted information.

Summary

Content scraping refers to the process of extracting data from web pages. Analysis does not always require fetching a live site: HTML content that a user supplies directly can be examined with the same extraction techniques. This highlights the importance of user involvement in the content scraping process, which enables tailored insights based on the specific information shared.

#ContentScraping #WebDataExtraction #DataMining #TechInsights #WebAutomation